Your business app & the web. So near and yet so far?

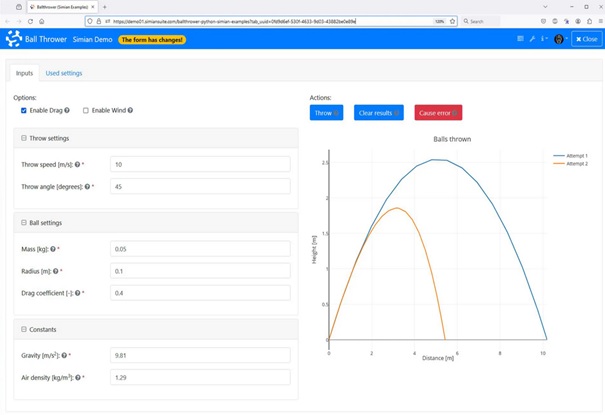

Business web apps. Empower yourself with Simian, or opt for our development service model.

Fuel your innovation with captivating apps. Cost-effective, time-efficient, secure and perfectly aligned with your business goals. Build low-code web apps yourself with Simian Web Apps, or let us handle development for you. Outpace traditional development methods several times over, all while removing burdensome IT dependencies.

Integration and cloud adoption. Deploy with confidence.

Tackle complex workflows and software integration challenges head-on. Our cloud-agnostic, language-agnostic approach ensures swift implementation, so you can share your apps with your audience effortlessly. Let's propel your innovation forward, together.